Amped Up

Does the notion of artificial intelligence have you conjuring up Hollywood’s images of cyborgs, posing as humans, coming back from the future to change the fate of the world? Or is the mere concept of AI (as scientists refer to it) emblematic of a world in which sentient computers like HAL, from 2001: A Space Odyssey, override the wishes of the humans they’re designed to assist and, while speaking in a soft eerie monotone, diabolically plan the deaths of an entire spaceship crew?

If the androids of Westworld represent the dark side of AI, heightened by a moviemaker’s poetic license, the truth is that artificial intelligence, once the stuff of science fiction, is already bettering the lives of humans in the real world. Artificial intelligence can be found in the electronics of your car, the Roomba that vacuums your home, and the smartphones we’re all tethered to. And that doesn’t take into consideration thermostats that know when you’re home and adjust the temperature, sophisticated fraud detection alerts on your bank account, or the music and movie recommendations provided to you by Spotify or Netflix.

“The notion of artificial intelligence has been around for a long time, but only recently have we had sufficiently powerful computers to actualize the ideas in this field,” said Ken Ford, who earned a PhD in computer science from Tulane in 1987. Ford is the founder and CEO of the Florida Institute for Human and Machine Cognition in Pensacola, Florida.

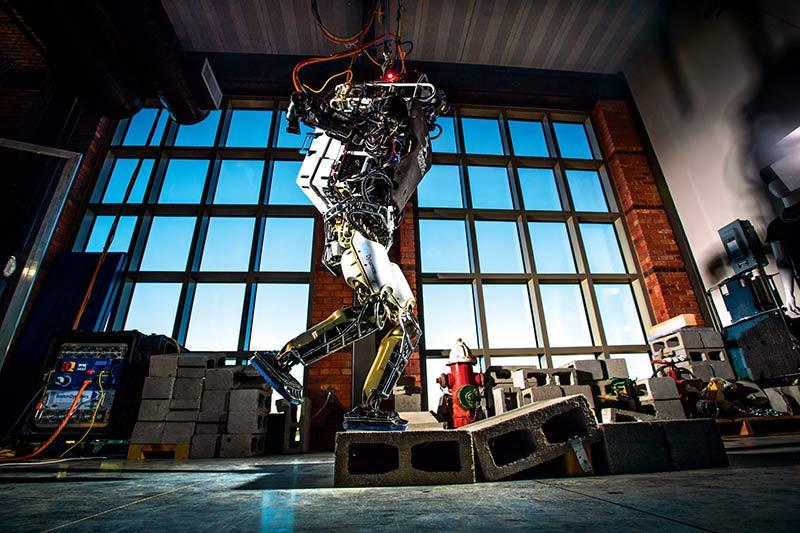

To walk around the institute is to come face-to-face with impressive robotic figures. In fact, Ford’s team from IHMC beat an array of competitors at the international Defense Advanced Research Projects Agency (DARPA) Robotics Challenge in June 2015. The team placed first among American teams, which included MIT and Carnegie Mellon, and second overall, garnering $1 million in prize money.

Ford emphasizes that the institute isn’t about replacing humans, but about amplifying them.

“There are various camps in the AI community,” said Ford. “Some are trying to build machines whose behavior is indistinguishable from humans. That was the original group. IHMC is a reaction to that as we’re not into building artificial humans. They are in good supply, already. And, by the way, the term artificial is a singularly poor name. Perhaps enhanced, augmented or amplified intelligence would be more apropos. Artificial implies something fake.”

To be sure, there’s nothing fake about the sort of intelligence that computers have been imbued with. Witness IBM’s Watson, which beat two of the show’s greatest human champions to win at “Jeopardy!,” or the stunning win by the British company DeepMind, whose AI program AlphaGo beat the 18-time world champion Lee Sedol at Go, the ancient Chinese board game. Playing Go at a world-class level had long been considered an impossible task for computers.

ETHICS OF SELF-DRIVING CARS

“Artificial intelligence that enables computers to display intelligent behavior comes about by programming in various ways,” said Brent Venable, associate professor of computer science at Tulane, who has a joint appointment with IHMC.

“One can write the rules into a computer, and then it will respond appropriately, or you can show it examples, where it will learn, and improve at tasks, with experience,” she said.

However, expecting a computer to exhibit appropriate reasoning becomes a bit more complex when thrown into the arbitrary world of morals and ethics.

“Autonomous cars are the current hysteria, when it comes to AI,” said Ford. “But, at this point, I feel the media tends to exaggerate what they are capable of. At best, they are not autonomous, but rather self-driving. Self-driving simply means that the car’s computational systems have physical control of the car. I hear the term ‘autonomous cars’ being bandied about, but who wants an autonomous car? If that were the case, the car might say to itself, ‘Ken wants to go get a martini, but I think he’s had too much to drink already, so I’m taking him for a pizza.’”

If this is starting to sound like the inmates running the asylum, we need to remind ourselves that how computers learn, and the inferences they make from the data we feed them, are critical to their making good decisions. With self-driving vehicles, decision-making must take into consideration whose ethics are serving as a template for behavior.

Venable, who formerly worked on NASA’s Mars rover, now has a grant from the Future of Life Institute, funded by Elon Musk, among others, to investigate safety and ethics in self-driving cars, such as those made by Musk’s Tesla. She finds the subject of AI at once confounding and fascinating.

“The AI system in a self-driving vehicle has far more information than a human driver,” said Venable. “Because it has 360-degree sensors, it sees things from all perspectives simultaneously. The car also knows how fast the cars around it are going, and can take evasive action in the event of an impending accident, with a lot more information than a human can call up quickly.”

But there’s nothing simple about some of the ethics questions that come into play. Imagine you’re driving down the road, with a passenger in the front seat, when a child suddenly darts out in front of you into the middle of the road, to chase a ball. Your only choices are to hit the child or swerve into a tree, which could injure you and your passenger. How do you program the computer driving the car when these sorts of moral dilemmas arise?

“These are just some of the ethical questions facing the manufacturers of self-driving cars,” said Venable. “There are some situations that a human driver wouldn’t know how to handle either in a split second. Recently Mercedes-Benz announced that the company’s future autonomous vehicles would always protect the passengers inside the car. They got a lot of criticism for that decision, but they feel a need to protect the people who buy their cars.”

Polls show that most people believe self-driving cars should always make the decision that causes the fewest fatalities, but most people also said they’d buy one of these cars only if their own safety was a priority.

DATA DRIVEN

The IHMC ethics project, which Venable is charged with designing, involves many researchers, including some of her Tulane students. Kyle Bogosian, a School of Liberal Arts undergraduate majoring in economics, is exploring ways to balance legal and social issues by expressing moral philosophy as an algorithm.

“When you entrust a machine with large amounts of data, it’s not possible to override or check every decision that it’s making,” said Bogosian. “However, if it’s programmed correctly, you hope its decisions will fall in line with the ethics you’ve programmed in. At any rate, it’s still more reliable than a human being behind the wheel, who is often distracted, and unable to make good split-second decisions.”

Venable also collaborates with neuroscientists such as Edward Golob, a former Tulane professor now at the University of Texas at San Antonio. They are studying how our brains function when it comes to predicting auditory responses.

“Let me give you an example,” said Venable. “If you tell me to pick up a pen on my desk, there is a sequence of events that occurs. And now, our computational models can tell us how long it takes you to create the concept of what a pen is, then to realize the pen is actually here on the desk, and then tell your brain to pick it up. We’ve now learned that what direction the sound is coming from, in terms of the command to pick up the pen, matters. The brain reacts very quickly to auditory stimuli from the direction where one’s voluntary attention is allocated, say, in front of the person. Then, there’s an auditory black hole on either side. But directly behind you, there’s a strong auditory response, no doubt programmed into our brains evolutionarily, from the days when early man needed to know who or what might be sneaking up behind him.”

Venable’s research assistant, PhD candidate Jaelle Scheuerman, believes the data they’re gathering will be useful in a variety of ways, from cockpit audio in a jetliner, to emergency warning systems.

“Many applications become clearer, once you figure out which sounds are most attention-grabbing,” said Scheuerman, “but trying to understand how people focus their attention is critical when you’re programming artificial intelligence to work with humans.”

Perhaps the most stunning application of AI is in the medical arena, where a helping hand from a well-programmed robot can mean getting research and treatment plans at warp speed.

“Computers,” said Ford at IHMC, “can be trained to read thousands of chapters about cancer pathways, which would take humans an enormous amount of time. A machine can then make inferences from this knowledge, which allows for personalized treatments based on genetics.”

And many feel that in the near future, AI will be reading and interpreting MRIs and recommending treatment, from its vast database of knowledge. But beyond diagnosis and treatment, the computer, through some clever and rather theatrical AI, will soon help Alzheimer’s patients.

“Many Alzheimer’s patients, who can be combative in the midst of losing brain function, are especially wary of their doctors, so a computer avatar, which looks like a dog but wears a wig, was devised to interact with the patients, and was deemed to be friendly and funny,” said Ford. IHMC researcher Yorick Wilks leads the dog avatar work, which he began at Oxford University and is now continuing.

“The patient can actually interact with the computer in a two-way chat,” said Ford. “The patient tells the friendly ‘dog’ that the family went on holiday in Morocco. The ‘dog’ knows what a family is, and knows what a holiday is, and at the lightning speed of a computer, it hooks up to Wikipedia to learn about Morocco, then consults with TripAdvisor to find the most interesting things to do, then replies seamlessly, ‘Oh, did you go to Fes el Bali?’”

Venable added, “It’s only 600 milliseconds between utterances in a dialogue, so these agents must be incredible at computing on the fly, allowing them to engage in normal conversation.”

Venable’s work extends to the science behind recommender systems. If you’ve ever received e-mails from Amazon suggesting what you should buy or watch, those suggestions draw on the preferences the company has collected about you, based on past purchases or viewing, to predict what your future choices will be. It may sound like Big Brother is watching your every move, but the technology seems here to stay. Moreover, younger people don’t seem to find this data collection invasive.

“The hazard is that as a society, we seem to have traded privacy for convenience,” said Ford, “and perhaps we should complain more about apps collecting information about us without distinct permission. If you ever read the fine print, you’d be amazed. One company nefariously had put in a clause that said, ‘You must give us your first-born child.’ No one read it. But it’s called informed consent. It’s neither ‘informed’ nor ‘consent,’ in any real sense.”

HUMAN INGENUITY

At the Institute for Human and Machine Cognition, there are robots aplenty, but they are a means to an end.

“At IHMC, we don’t sell products,” said Ford. “We do basic and applied science, build prototypes and then license intellectual property, when appropriate. Any technology can be used for good or ill. But machines are the products of human ingenuity, so we shouldn’t feel threatened by them. But interacting with devices at the expense of social interaction with other humans is one of the dangers of this technology. We should figure out the right way to interact with these devices, so that we, as people, are amplified, and not diminished.”

This article originally appeared in the March 2017 issue of Tulane magazine.